Ucertify offers free demo for Databricks-Certified-Data-Engineer-Associate exam. "Databricks Certified Data Engineer Associate Exam", also known as Databricks-Certified-Data-Engineer-Associate exam, is a Databricks Certification. This set of posts, Passing the Databricks Databricks-Certified-Data-Engineer-Associate exam, will help you answer those questions. The Databricks-Certified-Data-Engineer-Associate Questions & Answers covers all the knowledge points of the real exam. 100% real Databricks Databricks-Certified-Data-Engineer-Associate exams and revised by experts!

Online Databricks Databricks-Certified-Data-Engineer-Associate free dumps demo Below:

NEW QUESTION 1

A data engineer has a single-task Job that runs each morning before they begin working. After identifying an upstream data issue, they need to set up another task to run a new notebook prior to the original task.

Which of the following approaches can the data engineer use to set up the new task?

- A. They can clone the existing task in the existing Job and update it to run the new notebook.

- B. They can create a new task in the existing Job and then add it as a dependency of the original task.

- C. They can create a new task in the existing Job and then add the original task as a dependency of the new task.

- D. They can create a new job from scratch and add both tasks to run concurrently.

- E. They can clone the existing task to a new Job and then edit it to run the new notebook.

Answer: B

Explanation:

To set up the new task to run a new notebook prior to the original task in a single-task Job, the data engineer can use the following approach: In the existing Job, create a new task that corresponds to the new notebook that needs to be run. Set up the new task with the appropriate configuration, specifying the notebook to be executed and any necessary parameters or dependencies. Once the new task is created, designate it as a dependency of the original task in the Job configuration. This ensures that the new task is executed before the original task.

NEW QUESTION 2

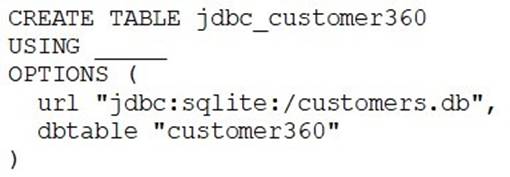

A data engineer needs to create a table in Databricks using data from their organization’s existing SQLite database.

They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

- A. org.apache.spark.sql.jdbc

- B. autoloader

- C. DELTA

- D. sqlite

- E. org.apache.spark.sql.sqlite

Answer: A

Explanation:

CREATE TABLE new_employees_table USING JDBC

OPTIONS (

url "<jdbc_url>",

dbtable "<table_name>", user '<username>', password '<password>'

) AS

SELECT * FROM employees_table_vw https://docs.databricks.com/external-data/jdbc.html#language-sql

NEW QUESTION 3

Which of the following benefits is provided by the array functions from Spark SQL?

- A. An ability to work with data in a variety of types at once

- B. An ability to work with data within certain partitions and windows

- C. An ability to work with time-related data in specified intervals

- D. An ability to work with complex, nested data ingested from JSON files

- E. An ability to work with an array of tables for procedural automation

Answer: D

Explanation:

Array functions in Spark SQL are primarily used for working with arrays and complex, nested data structures, such as those often encountered when ingesting JSON files. These functions allow you to manipulate and query nested arrays and structures within your data, making it easier to extract and work with specific elements or values within complex data formats. While some of the other options (such as option A for working with different data types) are features of Spark SQL or SQL in general, array functions specifically excel at handling complex, nested data structures like those found in JSON files.

NEW QUESTION 4

A new data engineering team team has been assigned to an ELT project. The new data engineering team will need full privileges on the table sales to fully manage the project.

Which of the following commands can be used to grant full permissions on the database to the new data engineering team?

- A. GRANT ALL PRIVILEGES ON TABLE sales TO team;

- B. GRANT SELECT CREATE MODIFY ON TABLE sales TO team;

- C. GRANT SELECT ON TABLE sales TO team;

- D. GRANT USAGE ON TABLE sales TO team;

- E. GRANT ALL PRIVILEGES ON TABLE team TO sales;

Answer: A

NEW QUESTION 5

A data engineer has a Python variable table_name that they would like to use in a SQL query. They want to construct a Python code block that will run the query using table_name.

They have the following incomplete code block:

(f"SELECT customer_id, spend FROM {table_name}")

Which of the following can be used to fill in the blank to successfully complete the task?

- A. spark.delta.sql

- B. spark.delta.table

- C. spark.table

- D. dbutils.sql

- E. spark.sql

Answer: E

NEW QUESTION 6

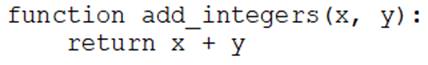

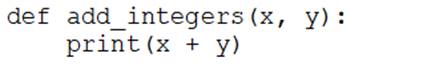

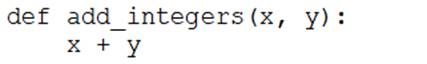

A data engineer that is new to using Python needs to create a Python function to add two integers together and return the sum?

Which of the following code blocks can the data engineer use to complete this task?

A)

B)

C)

D)

E)

- A. Option A

- B. Option B

- C. Option C

- D. Option D

- E. Option E

Answer: D

Explanation:

https://www.w3schools.com/python/python_functions.asp

NEW QUESTION 7

Which of the following benefits of using the Databricks Lakehouse Platform is provided by Delta Lake?

- A. The ability to manipulate the same data using a variety of languages

- B. The ability to collaborate in real time on a single notebook

- C. The ability to set up alerts for query failures

- D. The ability to support batch and streaming workloads

- E. The ability to distribute complex data operations

Answer: D

Explanation:

Delta Lake is a key component of the Databricks Lakehouse Platform that provides several benefits, and one of the most significant benefits is its ability to support both batch and streaming workloads seamlessly. Delta Lake allows you to process and analyze data in real-time (streaming) as well as in batch, making it a versatile choice for various data processing needs. While the other options may be benefits or capabilities of Databricks or the Lakehouse Platform in general, they are not specifically associated with Delta Lake.

NEW QUESTION 8

Which of the following describes when to use the CREATE STREAMING LIVE TABLE (formerly CREATE INCREMENTAL LIVE TABLE) syntax over the CREATE LIVE TABLE syntax when creating Delta Live Tables (DLT) tables using SQL?

- A. CREATE STREAMING LIVE TABLE should be used when the subsequent step in the DLT pipeline is static.

- B. CREATE STREAMING LIVE TABLE should be used when data needs to be processed incrementally.

- C. CREATE STREAMING LIVE TABLE is redundant for DLT and it does not need to be used.

- D. CREATE STREAMING LIVE TABLE should be used when data needs to be processed through complicated aggregations.

- E. CREATE STREAMING LIVE TABLE should be used when the previous step in the DLT pipeline is static.

Answer: B

Explanation:

The CREATE STREAMING LIVE TABLE syntax is used when you want to create Delta Live Tables (DLT) tables that are designed for processing data incrementally. This is typically used when your data pipeline involves streaming or incremental data updates, and you want the table to stay up to date as new data arrives. It allows you to define tables that can handle data changes incrementally without the need for full table refreshes.

NEW QUESTION 9

A data engineer has realized that the data files associated with a Delta table are incredibly small. They want to compact the small files to form larger files to improve performance.

Which of the following keywords can be used to compact the small files?

- A. REDUCE

- B. OPTIMIZE

- C. COMPACTION

- D. REPARTITION

- E. VACUUM

Answer: B

Explanation:

OPTIMIZE can be used to club small files into 1 and improve performance.

NEW QUESTION 10

A data engineer has realized that they made a mistake when making a daily update to a table. They need to use Delta time travel to restore the table to a version that is 3 days old. However, when the data engineer attempts to time travel to the older version, they are unable to restore the data because the data files have been deleted.

Which of the following explains why the data files are no longer present?

- A. The VACUUM command was run on the table

- B. The TIME TRAVEL command was run on the table

- C. The DELETE HISTORY command was run on the table

- D. The OPTIMIZE command was nun on the table

- E. The HISTORY command was run on the table

Answer: A

Explanation:

The VACUUM command in Delta Lake is used to clean up and remove unnecessary data files that are no longer needed for time travel or query purposes. When you run VACUUMwith certain retention settings, it can delete older data files, which might include versions of data that are older than the specified retention period. If the data engineer is unable to restore the table to a version that is 3 days old because the data files have been deleted, it's likely because the VACUUM command was run on the table, removing the older data files as part of data cleanup.

NEW QUESTION 11

A data engineer has joined an existing project and they see the following query in the project repository:

CREATE STREAMING LIVE TABLE loyal_customers AS SELECT customer_id -

FROM STREAM(LIVE.customers) WHERE loyalty_level = 'high';

Which of the following describes why the STREAM function is included in the query?

- A. The STREAM function is not needed and will cause an error.

- B. The table being created is a live table.

- C. The customers table is a streaming live table.

- D. The customers table is a reference to a Structured Streaming query on a PySpark DataFrame.

- E. The data in the customers table has been updated since its last run.

Answer: C

Explanation:

https://docs.databricks.com/en/sql/load-data-streaming-table.html Load data into a streaming table

To create a streaming table from data in cloud object storage, paste the following into the query editor, and then click Run:

SQL

Copy to clipboardCopy

/* Load data from a volume */

CREATE OR REFRESH STREAMING TABLE <table-name> AS SELECT * FROM STREAM

read_files('/Volumes/<catalog>/<schema>/<volume>/<path>/<folder>')

/* Load data from an external location */

CREATE OR REFRESH STREAMING TABLE <table-name> AS

SELECT * FROM STREAM read_files('s3://<bucket>/<path>/<folder>')

NEW QUESTION 12

In which of the following scenarios should a data engineer use the MERGE INTO command instead of the INSERT INTO command?

- A. When the location of the data needs to be changed

- B. When the target table is an external table

- C. When the source table can be deleted

- D. When the target table cannot contain duplicate records

- E. When the source is not a Delta table

Answer: D

Explanation:

With merge , you can avoid inserting the duplicate records. The dataset containing the new logs needs to be deduplicated within itself. By the SQL semantics of merge, it matches and deduplicates the new data with the existing data in the table, but if

there is duplicate data within the new dataset, it is inserted.https://docs.databricks.com/en/delta/merge.html#:~:text=With%20merge%20%2C

%20you%20can%20avoid%20inserting%20the%20duplicate%20records.&text=The%20dat aset%20containing%20the%20new,new%20dataset%2C%20it%20is%20inserted.

NEW QUESTION 13

A data analyst has created a Delta table sales that is used by the entire data analysis team. They want help from the data engineering team to implement a series of tests to ensure the data is clean. However, the data engineering team uses Python for its tests rather than SQL.

Which of the following commands could the data engineering team use to access sales in PySpark?

- A. SELECT * FROM sales

- B. There is no way to share data between PySpark and SQL.

- C. spark.sql("sales")

- D. spark.delta.table("sales")

- E. spark.table("sales")

Answer: E

Explanation:

https://spark.apache.org/docs/3.2.1/api/python/reference/api/pyspark.sql.SparkSession.tabl e.html

NEW QUESTION 14

Which of the following data workloads will utilize a Gold table as its source?

- A. A job that enriches data by parsing its timestamps into a human-readable format

- B. A job that aggregates uncleaned data to create standard summary statistics

- C. A job that cleans data by removing malformatted records

- D. A job that queries aggregated data designed to feed into a dashboard

- E. A job that ingests raw data from a streaming source into the Lakehouse

Answer: D

NEW QUESTION 15

Which of the following code blocks will remove the rows where the value in column age is greater than 25 from the existing Delta table my_table and save the updated table?

- A. SELECT * FROM my_table WHERE age > 25;

- B. UPDATE my_table WHERE age > 25;

- C. DELETE FROM my_table WHERE age > 25;

- D. UPDATE my_table WHERE age <= 25;

- E. DELETE FROM my_table WHERE age <= 25;

Answer: C

NEW QUESTION 16

A data analyst has developed a query that runs against Delta table. They want help from the data engineering team to implement a series of tests to ensure the data returned by the query is clean. However, the data engineering team uses Python for its tests rather than SQL.

Which of the following operations could the data engineering team use to run the query and operate with the results in PySpark?

- A. SELECT * FROM sales

- B. spark.delta.table

- C. spark.sql

- D. There is no way to share data between PySpark and SQL.

- E. spark.table

Answer: C

Explanation:

from pyspark.sql import SparkSession spark = SparkSession.builder.getOrCreate()

df = spark.sql("SELECT * FROM sales") print(df.count())

NEW QUESTION 17

......

Recommend!! Get the Full Databricks-Certified-Data-Engineer-Associate dumps in VCE and PDF From 2passeasy, Welcome to Download: https://www.2passeasy.com/dumps/Databricks-Certified-Data-Engineer-Associate/ (New 88 Q&As Version)